(This article used ChatGPT to organize and clarify the points.)

I. MOTIVATION: WHY WAS THIS STUDY DONE?

- Who funded the study?

- Follow the money. Industry-funded studies are significantly more likely to reach conclusions favorable to the sponsor.

- Ask: Would the funder lose money or credibility if the study reached a different result?

- Do the authors or institutions have any conflicts of interest?

- Look for financial ties to corporations, pharmaceutical companies, biotech firms, lobbying groups, or government contracts.

- A publication record that consistently favors one outcome can also be a sign of narrative alignment.

- What is the stated goal of the study—and what is the real goal?

- Is the stated objective genuine scientific inquiry, or is it subtly designed to defend a product, policy, or institutional decision?

- Does the study aim to discover something new, or protect what is already assumed?

- What alternative hypothesis is being denied space?

- What competing theories, concerns, or explanations are left unmentioned?

- Ask: What isn’t being tested, and why not? Sometimes what is not studied is more revealing than what is.

II. DESIGN: HOW WAS THE STUDY BUILT?

- What was the study type?

- Randomized controlled trial (RCT)? Observational? Retrospective? Meta-analysis?

- Each design has its own blind spots. RCTs may still be biased through design choices. Observational studies may suffer from confounding. Meta-analyses can be gamed by selective inclusion.

- Is it an efficacy study or a real-world effectiveness study?

- Efficacy = performance under ideal, tightly controlled trial conditions.

- Effectiveness = actual performance in real populations.

- Many products pass efficacy tests but fail real-world tests.

- Was there a control group—and was it valid?

- If “placebo” was used, was it truly inert? (Some trials use a comparator that contains adjuvants or other biologically active components rather than an inert substance.)

- If observational, were controls matched properly (age, sex, socioeconomic status, comorbidities)?

- Was blinding used, and who was blinded?

- Were both participants and researchers unaware of the group assignments?

- Lack of blinding opens the door to expectation bias, conscious or not.

- Was the sample size large enough and relevant to the real world?

- A small study may miss real effects; a very large one may flag differences that are statistically significant but too small to matter in practice.

- Most importantly: Was the population representative of who will receive or be affected by the intervention?

- Was convenience sampling used?

- Were participants chosen because they were easy to recruit (e.g., students, soldiers, hospital employees)?

- Such samples are often healthier, more compliant, and not representative of general populations.

- Were any groups systematically excluded?

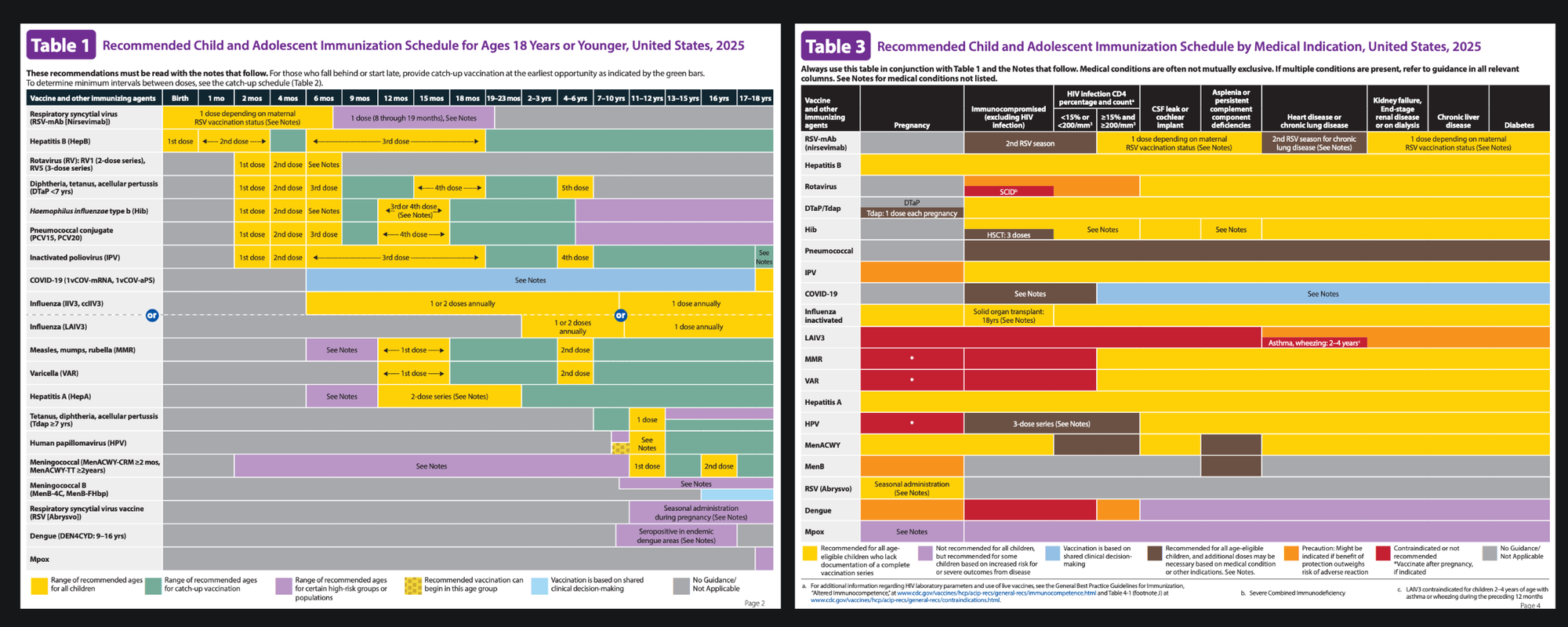

- Were elderly, chronically ill, pregnant, immunocompromised, or previously exposed individuals excluded?

- If so, the safety or efficacy claims cannot be assumed to apply to those groups.

- Were the endpoints relevant to human outcomes?

- Are the endpoints actual measures of health (survival, disease reversal), or proxies (antibodies, lab values)?

- “Increased antibodies” ≠ protection unless real-world benefit is proven.

- How long was the follow-up period?

- Was the duration long enough to observe delayed effects (e.g., cancer, autoimmune disease, neurological disorders)?

- Many adverse outcomes are missed simply because studies stop too early.

- What outcomes were not measured?

- Were all-cause mortality, hospitalization, and quality of life included?

- Or were inconvenient outcomes left out?
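
The sample-size point above can be made concrete with a little arithmetic. The following is a minimal sketch, assuming a pooled two-proportion z-test and a normal approximation; the function name `two_proportion_p_value` and both sets of trial numbers are hypothetical and chosen only for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(events_a, n_a, events_b, n_b):
    """Two-sided p-value for a difference in proportions (pooled z-test)."""
    p_a, p_b = events_a / n_a, events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical small trial: 10% vs 5% event rate, 100 people per arm.
small = two_proportion_p_value(10, 100, 5, 100)

# Hypothetical huge trial: 10.0% vs 9.8% event rate, 1,000,000 people per arm.
large = two_proportion_p_value(100_000, 1_000_000, 98_000, 1_000_000)

print(f"small trial, risk halved:          p = {small:.3f}")
print(f"huge trial, 0.2-point difference:  p = {large:.6f}")
```

Under these assumptions the small trial fails to reach the conventional 0.05 threshold even though the risk is halved, while the huge trial clears it easily for a difference of 0.2 percentage points. The p-value alone says nothing about whether an effect is large enough to matter.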

III. STATISTICS: HOW WAS THE DATA HANDLED?

- Was the study powered to detect meaningful effects?

- Did they calculate the required sample size to detect realistic differences?

- Were results presented in relative or absolute terms?

- “95% effective” may mean reducing risk from 1% to 0.05%: a 95% relative reduction, but an absolute difference of only 0.95 percentage points.

- Always demand absolute risk reductions to understand real-world impact.

- Were statistical adjustments used—and how many?

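- Multivariable adjustment can control for confounding, but adjusting for the wrong variables (for example, ones that lie on the causal pathway between exposure and outcome) can also obscure real signals.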

- Excessive adjustment = data sculpting.

- Were any data excluded as “outliers”?

- Was there transparency in how and why data were removed?

- Sometimes negative results are dismissed under the guise of noise.

- Were subgroup analyses conducted—and were they cherry-picked?

- Testing 20 independent subgroups at the usual 0.05 threshold gives roughly a 64% chance of at least one “significant” result from chance alone, even when no real effect exists.

- If no correction for multiple comparisons was applied, it's statistical sleight-of-hand.
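
Two of the checks in this section reduce to simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, the risk figures are the ones used in the relative-versus-absolute example above, the subgroup calculation assumes the tests are independent, and the number needed to treat and the Bonferroni threshold are added here as common ways of expressing the same ideas, not as the only valid ones.

```python
def risk_summary(control_risk, treated_risk):
    """Return relative risk reduction, absolute risk reduction, and NNT."""
    arr = control_risk - treated_risk   # absolute risk reduction
    rrr = arr / control_risk            # relative risk reduction
    nnt = 1 / arr                       # number needed to treat
    return rrr, arr, nnt


def chance_of_false_positive(n_tests, alpha=0.05):
    """Probability of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** n_tests


# Risk falls from 1% to 0.05%, as in the example above.
rrr, arr, nnt = risk_summary(0.01, 0.0005)
print(f"relative risk reduction: {rrr:.0%}")
print(f"absolute risk reduction: {arr:.2%}")
print(f"number needed to treat:  {nnt:.0f}")

# Twenty subgroups, each tested at the conventional 0.05 threshold.
print(f"chance of a spurious hit in 20 subgroups: {chance_of_false_positive(20):.0%}")
print(f"Bonferroni per-test threshold for 20 tests: {0.05 / 20}")
```

The same headline figure can describe very different absolute benefits depending on the baseline risk, which is why the absolute numbers matter. Likewise, a single “significant” subgroup out of many is weak evidence unless the threshold was tightened to account for the number of tests.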

IV. LANGUAGE AND FRAMING: WHAT WORDS ARE BEING USED?

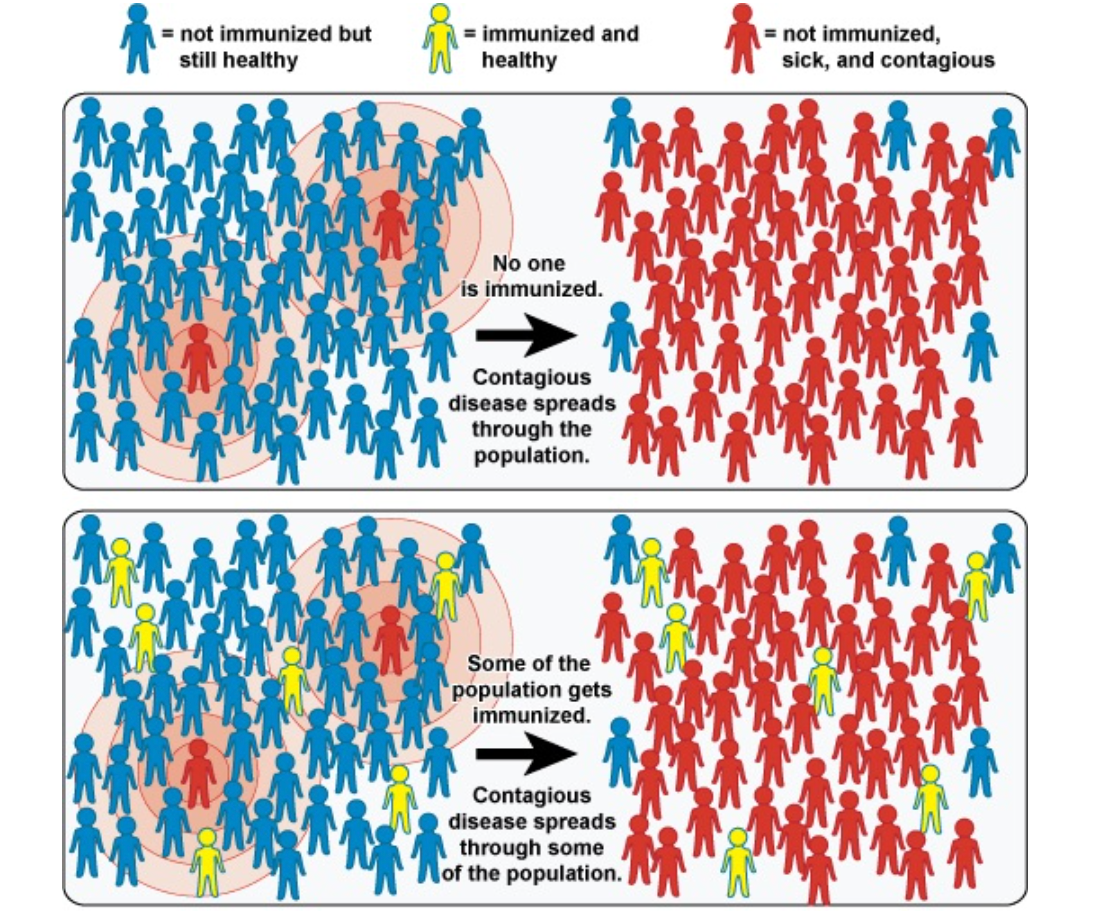

- Is framing by negation used?

- “No evidence of harm” ≠ “evidence of no harm.”

- Such language implies safety when the study may have been underpowered, or simply not designed, to detect harm.

- Are minimization terms present?

- “Mild,” “transient,” “no significant increase,” “reassuring,” “well-tolerated” — these are subjective qualifiers used to downplay signals.

- Read past them and look at the raw numbers.

- Do the conclusions overreach the data?

- Are the authors making claims beyond what their data support?

- Example: A short-term antibody study concluding “long-term protection” or “safe in pregnancy” without testing either.

- Does the abstract match the body?

- Often, the abstract spins a positive interpretation, while the body shows mixed or negative data.

- Don’t rely on abstracts. Read the entire study.

- Is language used to pathologize concern?

- Watch for labeling of critics or adverse effect reports as “hesitancy,” “misinformation,” or “anti-science,” instead of addressing their arguments directly.

V. PSYCHOLOGICAL AND SYSTEMIC CONTEXT: WHY THIS MATTERS

- Is the study replicable—and has it been replicated?

- One result means little. Have others found the same?

- If replications are missing or contradictory, the original finding cannot yet be considered robust.

- Is the journal independent or industry-tied?

- Some journals have editorial boards stacked with industry consultants.

- Look for patterns of selective publication, rapid approval, or ghostwriting.

- Were adverse events transparently reported—or buried?

- Were serious outcomes disaggregated, or hidden in broad categories (e.g., “neurological event”)?

- Were outcomes clearly defined, or ambiguous?

- Was all raw data made available for independent review?

- If not, ask why. Transparency is the bedrock of science.

- Secrecy = control = possible fraud or selective omission.

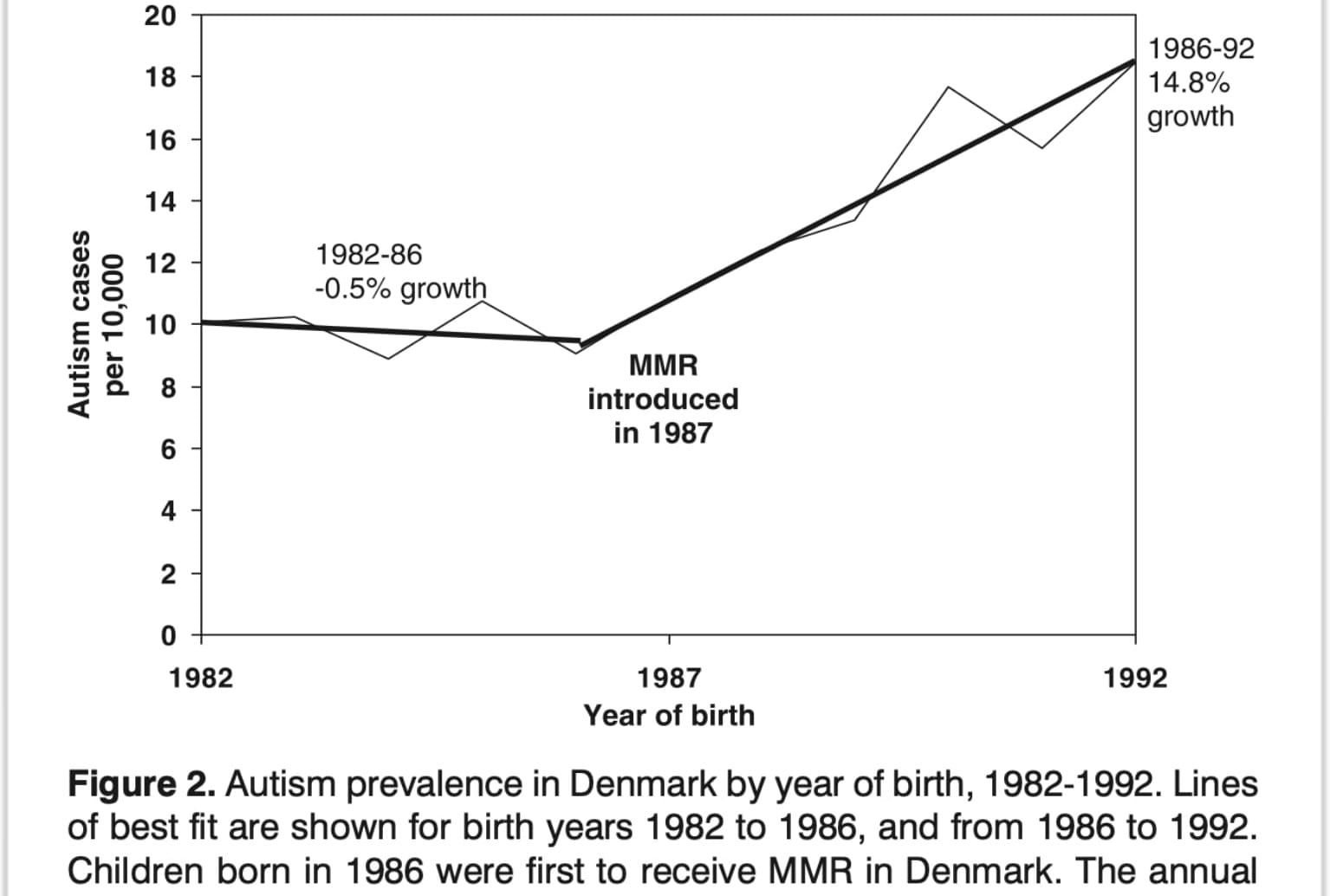

- Were relevant external data ignored?

- Did the study address or cite contradictory findings?

- If there are known adverse signals from real-world surveillance, and these are unmentioned, it signals narrative protection.

- Who benefits if the conclusion is accepted?

- Is there a policy, product, mandate, or narrative that this study upholds?

- Understanding the power structure behind the science is essential for interpretation.

Final Principle: Science ≠ Neutral

Every study is a story told by humans, often within institutions that have agendas, liabilities, markets, and fears. This guide does not mean rejecting all science—it means learning to read it as a system of persuasion as much as a system of discovery.

The question is not just "What does the study say?"

But always: "What does it allow itself to say—and what does it silence?"

Use this framework every time. It applies across disciplines, whether you're reading about a new drug, food additive, medical device, behavioral program, or environmental policy.

This is not skepticism.

This is literacy.
